I am a machine learning researcher focused on developing trustworthy, interpretable, and socially beneficial AI systems.

Currently, I'm a scholar in the ML Alignment & Theory Scholars (MATS) Program, working on Scalable Oversight and AI Control with David Lindner, Roland Zimmermann, and Scott Emmons.

My previous research includes work on the reliability of steering vectors in Large Language Models with Dmitrii Krasheninnikov and David Krueger at the Krueger AI Safety Lab at the University of Cambridge. I have also worked on applications of representation engineering at the Health-NLP group, supervised by Seyed Ali Bahrainian and Carsten Eickhoff.

I am passionate about public debate and democratic discourse as ways to shape informed perspectives and drive effective policy.

I hold a Master’s degree in Machine Learning and a Bachelor’s degree in Computer Science from the University of Tübingen. My research sits at the intersection of Deep Learning and Natural Language Processing, motivated by the challenge of building safe and equitable AI systems. As AI technologies improve, I aim to help address societal risks and promote equitable benefits by advancing scientific understanding and supporting effective governance.

Feel free to reach out to me by email!

Publications

Joschka Braun, Carsten Eickhoff, Seyed Ali Bahrainian

19th Conference of the European Chapter of the Association for Computational Linguistics, 2026

Paper / Poster / Code /

@InProceedings{braun2026beyond,

author = {Joschka Braun and Carsten Eickhoff and Seyed Ali Bahrainian},

title = {Beyond Multiple Choice: Evaluating Steering Vectors for Summarization},

booktitle = {19th Conference of the European Chapter of the Association for Computational Linguistics},

year = {2026},

}

Joschka Braun*, Yeonwoo Jang*, Damon Falck*, Roland S. Zimmermann, David Lindner, Scott Emmons

NeurIPS 2025 Workshop on Biosecurity Safeguards for Generative AI (Oral, Best Paper Runner-Up), 2025

Paper /

@InProceedings{braun2025_resisting,

author = {Joschka Braun* and Yeonwoo Jang* and Damon Falck* and Roland S. Zimmermann and David Lindner and Scott Emmons},

title = {Resisting RL Elicitation of Biosecurity Capabilities: Reasoning Models Exploration Hacking on WMDP},

booktitle = {NeurIPS 2025 Workshop on Biosecurity Safeguards for Generative AI (Oral, Best Paper Runner-Up)},

year = {2025},

}

Joschka Braun

MSc Thesis, University of Tübingen, 2026

Paper / Code /

@InProceedings{braun2026understandingunreliabilitysteeringvectors,

author = {Joschka Braun},

title = {Understanding Unreliability of Steering Vectors in Language Models: Geometric Predictors and the Limits of Linear Approximations},

booktitle = {MSc Thesis, University of Tübingen},

year = {2026},

eprint = {2602.17881},

}

Joschka Braun, Carsten Eickhoff, Seyed Ali Bahrainian

ICML 2025 Workshop on Reliable and Responsible Foundation Models, 2025

Paper / Poster / Code / arXiv /

@InProceedings{braun2025beyond,

author = {Joschka Braun and Carsten Eickhoff and Seyed Ali Bahrainian},

title = {Beyond Multiple Choice: Evaluating Steering Vectors for Adaptive Free-Form Summarization},

booktitle = {ICML 2025 Workshop on Reliable and Responsible Foundation Models},

year = {2025},

eprint = {2505.24859},

}

Joschka Braun, Carsten Eickhoff, David Krueger, Seyed Ali Bahrainian, Dmitrii Krasheninnikov

ICLR 2025 Workshop on Foundation Models in the Wild, 2025

Paper / arXiv /

@InProceedings{braun2025understanding,

author = {Joschka Braun and Carsten Eickhoff and David Krueger and Seyed Ali Bahrainian and Dmitrii Krasheninnikov},

title = {Understanding (Un)Reliability of Steering Vectors in Language Models},

booktitle = {ICLR 2025 Workshop on Foundation Models in the Wild},

year = {2025},

eprint = {2505.22637},

}

Joschka Braun, Bálint Mucsányi, Seyed Ali Bahrainian

Implemented a custom LogitsProcessor class to reweight logits of topic-relevant tokens during summary generation. Evaluated and compared different strategies on the NEWTS dataset., 2024

Paper / Code / arXiv /

@InProceedings{Braun_Reweighting_Logits_2024,

author = {Joschka Braun and Bálint Mucsányi and Seyed Ali Bahrainian},

title = {Logit Reweighting for Topic-Focused Summarization},

booktitle = {Implemented a custom LogitsProcessor class to reweight logits of topic-relevant tokens during summary generation. Evaluated and compared different strategies on the NEWTS dataset.},

year = {2024},

eprint = {2507.05235},

}

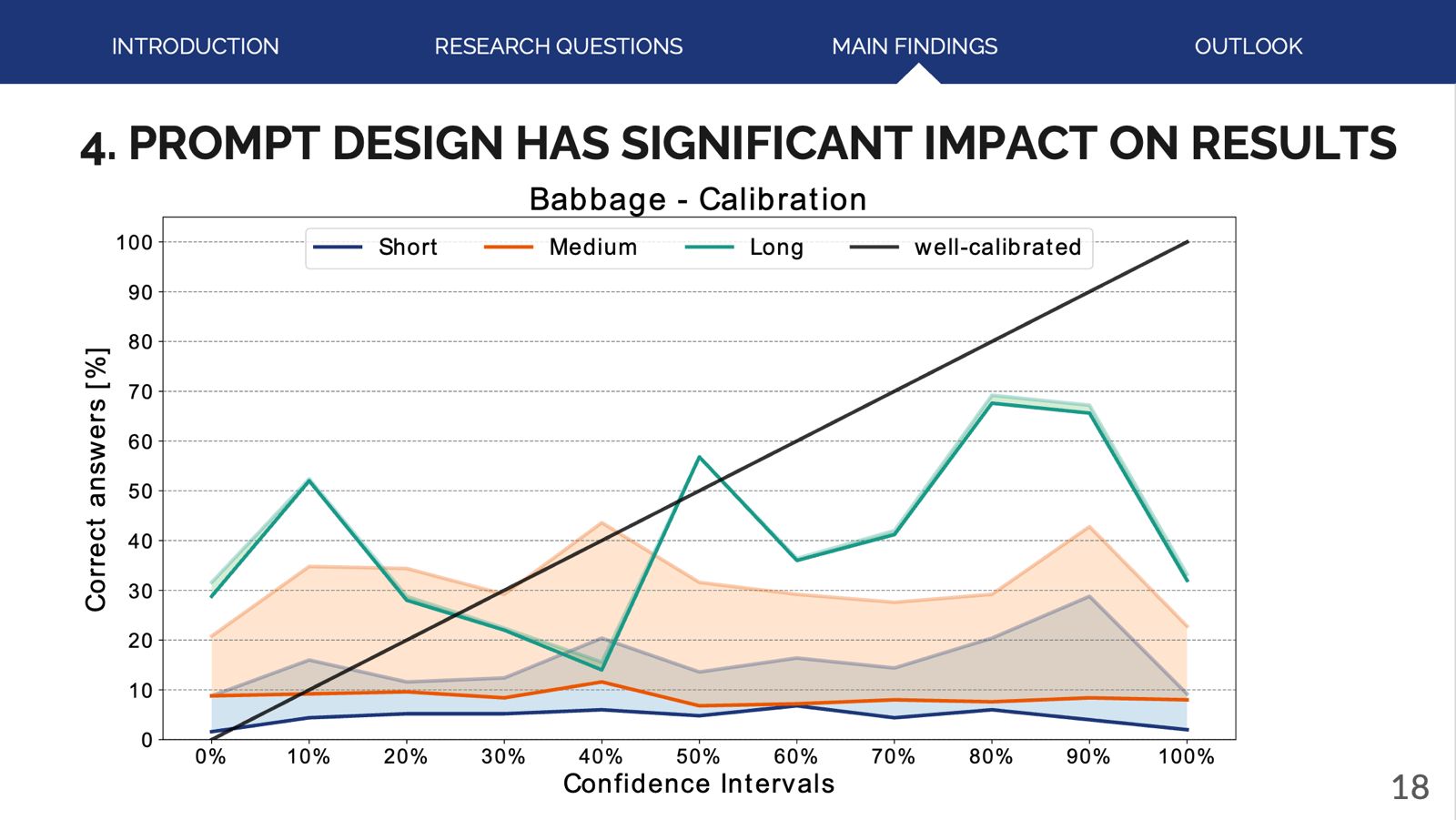

Joschka Braun

BSc Thesis at University of Tübingen, 2022

Paper / Slides /

@InProceedings{Braun2022BSCTHESIS,

author = {Joschka Braun},

title = {Verbal Epistemic Uncertainty Estimation for Numeric Values with GPT-3},

booktitle = {BSc Thesis at University of Tübingen},

year = {2022},

}

Talks

University of Tübingen, 2022

Slides

Public Debates

I debated risk-based AI regulation with Ina Brandes, Minister for Culture and Science of North Rhine-Westphalia, as part of the RWI-Wirtschaftsgespräch 2023. Our discussion centered on balancing innovation and societal-scale risks to ensure artificial intelligence provides a net benefit to society.

Video / Link

With my teammate Dominik Hermle, I defended the 2020 German Debating Championship (DDM) title, becoming the first team in the event's 20-year history to win in consecutive years. The final round topic was "Should Western countries no longer intervene militarily abroad?"

Video / Link

Miscellaneous

Joschka Braun, Damon Falck, Yeonwoo Jang

We formalize and decompose exploration hacking, where models strategically alter their exploration to resist RL training, presenting a conceptual framework and open problems ahead of our upcoming empirical paper., 2026

Project Page /

@InProceedings{Braun_Exploration_Hacking_Blog_Post_2026,

author = {Joschka Braun and Damon Falck and Yeonwoo Jang},

title = {A Conceptual Framework for Exploration Hacking},

booktitle = {We formalize and decompose exploration hacking, where models strategically alter their exploration to resist RL training, presenting a conceptual framework and open problems ahead of our upcoming empirical paper.},

year = {2026},

}

Damon Falck, Joschka Braun, Yeonwoo Jang

Exploration hacking, where models subvert their RL training by selectively under-exploring, could threaten both the development of beneficial capabilities and the effectiveness of safety training., 2025

Project Page /

@InProceedings{Braun_Exploration_Hacking_Blog_Post_2025,

author = {Damon Falck and Joschka Braun and Yeonwoo Jang},

title = {Exploration hacking: can reasoning models subvert RL?},

booktitle = {Exploration hacking, where models subvert their RL training by selectively under-exploring, could threaten both the development of beneficial capabilities and the effectiveness of safety training.},

year = {2025},

}

Joschka Braun, Damon Falck, Yeonwoo Jang

Participated in the June 2025 Anthropic Alignment Hackathon in San Francisco, co-developing an "Exploration Hacking" prototype over two days alongside Damon Falck and Yeonwoo Jang., 2025

Slides /

@InProceedings{Braun_Anthropic_Hackathon_2025,

author = {Joschka Braun and Damon Falck and Yeonwoo Jang},

title = {Anthropic Alignment Hackathon: Exploration Hacking},

booktitle = {Participated in the June 2025 Anthropic Alignment Hackathon in San Francisco, co-developing an "Exploration Hacking" prototype over two days alongside Damon Falck and Yeonwoo Jang.},

year = {2025},

}

David Rein et al.

Acknowledged Contributor to HCAST benchmark: Contributed task feedback and set human performance baselines for ML Engineering tasks., 2025

Project Page / arXiv /

@InProceedings{rein2025hcasthumancalibratedautonomysoftware,

author = {David Rein and others},

title = {HCAST: Human-Calibrated Autonomy Software Tasks},

booktitle = {Acknowledged Contributor to HCAST benchmark: Contributed task feedback and set human performance baselines for ML Engineering tasks.},

year = {2025},

eprint = {2503.17354},

}

Joschka Braun, Dmitrii Krasheninnikov, Usman Anwar, Robert Kirk, Daniel Tan, David Krueger

Blog post on the key challenges in controlling LLM behaviour with steering vectors. Published on The Alignment Forum., 2024

Project Page / Paper /

@InProceedings{Braun_Steering_Blog_Post_2024,

author = {Joschka Braun and Dmitrii Krasheninnikov and Usman Anwar and Robert Kirk and Daniel Tan and David Krueger},

title = {A Sober Look at Steering Vectors for LLMs},

booktitle = {Blog post on the key challenges in controlling LLM behaviour with steering vectors. Published on The Alignment Forum.},

year = {2024},

}

Homepage Template

This website is based on the template of Michael Niemeyer. Check out his GitHub repository for instructions on how to use it.